Deep fake examples, targeting and the Other Twitter Files

Plus some good news/bad news from the TIC Community

Welcome to new followers! It was great to meet some of you at the International Association of Business Communicators DC session on Tuesday. I shared the evidence base for adopting a more relational, social change approach to curbing mis- and disinformation, plus some tips we can all use and – hopefully – inspiration for communicators, who are key players in addressing this pervasive problem. The video will be posted to IABC DC's YouTube page shortly; I'll share it when it's up.

Breaking news from the field

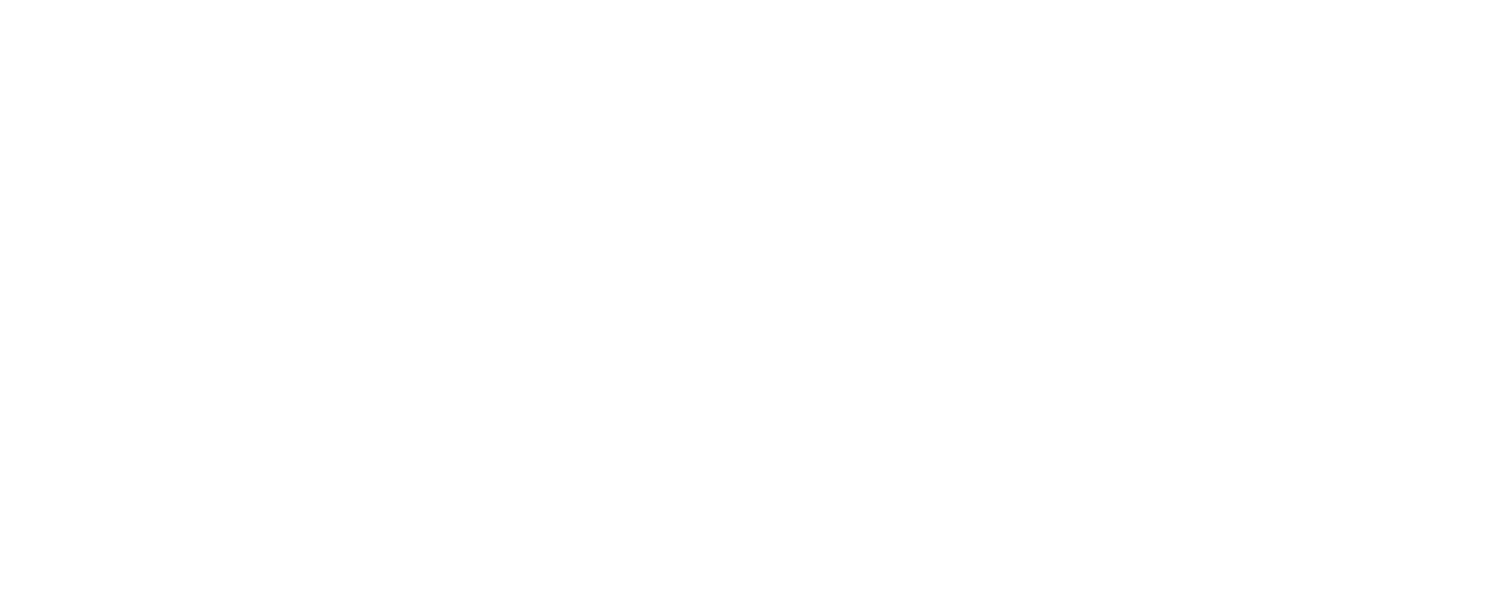

Deep fakes: The New York Times just exposed one of the first published examples of an entirely fake broadcast outlet, complete with AI-generated commentators "speaking" to camera. The examples are compelling and are the best way to understand deep fakes, so I encourage you to take a look – the article appears to be unlocked: https://www.nytimes.com/2023/02/07/technology/artificial-intelligence-training-deepfake.html?smid=nytcore-ios-share&referringSource=articleShare

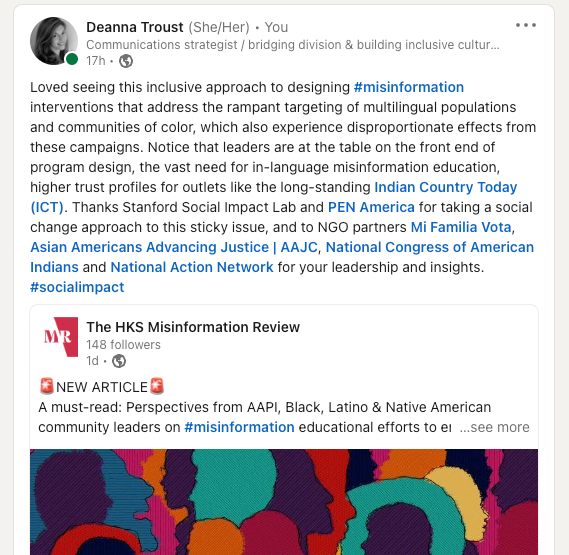

Harmful targeting: Audience-specific content is the hallmark of any good communications or marketing effort, but disinformation purveyors are taking advantage of the micro-targeting capabilities that social media platforms provide to share false and misleading content with specific populations. This article in the HKS Misinformation Review is excellent – here's the link and my comments:

Twitter Files and beyond: FYI, everyone badgered previous Twitter leadership about content, "censorship," etc. Grateful to Rolling Stone reporters for sharing the other side of the Twitter Files story – and exposing the clear political agendas that drove the exercise.

Twitter restricting access to the API: This story's a bit technical for some, but it will affect our understanding of how mis- and disinformation spread on social media. Today, Twitter is cutting off free access to its API (application programming interface). This means independent researchers like my former partners at NYU's Cybersecurity for Democracy (C4D) will have to pay for the data they use in their mis- and disinformation research. Given social media platforms' outsized impact on everything from mental health to democratic processes, we need independent research that holds platforms accountable for their practices. Examples include research that exposed Facebook's poor track record in following its own policies governing paid political ads, and research showing that its moderation of content promoting violence on January 6 was too slow to do much good.

The risk of actions like Twitter's – reminiscent of August 2021, when Facebook deleted the C4D team's accounts to deter their work – had already prompted the independent research community to form the Coalition for Independent Technology Research, which issued an open letter to Twitter on February 6 and has established a mutual aid fund for their peers. Follow them on Twitter @transparenttech or visit independenttechresearch.org.

Twitter's decision to revoke access to their free API will severely disrupt thousands of public interest projects worldwide. @transparenttech is calling on Twitter to reverse this decision in a letter published today: https://t.co/UpSyd3S3sf

— Coalition for Independent Technology Research (@transparenttech) February 6, 2023

News nuggets from the Truth in Common community

That's it for today. Please drop a comment and let me know what's interesting and useful here, and what's not.