Separating fact from fiction just got more difficult

BREAKING: The Supreme Court just upheld the "TikTok ban," a federal law forcing China's ByteDance to either sell the popular app by Sunday or see it go dark in the U.S. In a reversal, the incoming administration is advocating for more time and the right "deal" so the platform can continue operations. TikTok CEO Shou Zi Chew is coming to DC this weekend for inaugural festivities (gift article, Washington Post), and the ACLU views the whole business as unconstitutional.

You know who notified me of this news first? Ground News, which shows you the range of articles about a news event. Check it out.

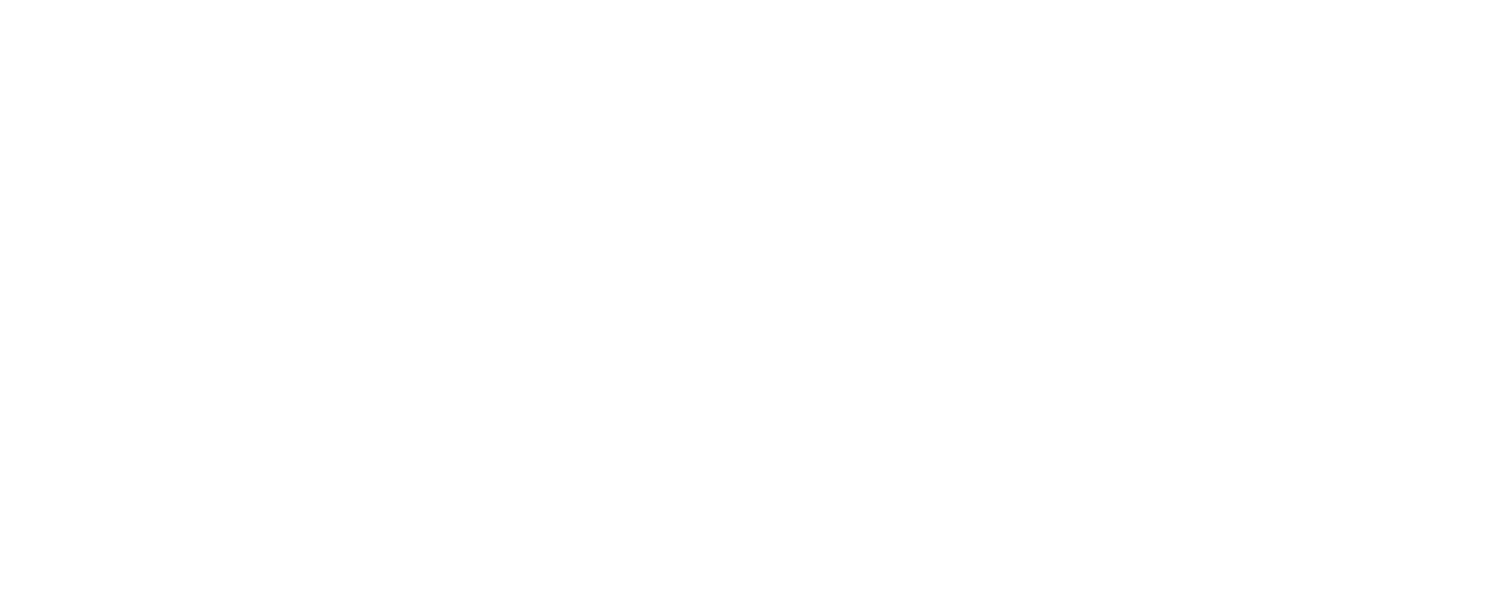

Meanwhile, as you've likely seen, disinformation about the fires in Los Angeles County is complicating the crisis as fires continue to rage and the death toll hits 27. In one example, posters shared a video across Instagram, TikTok and X that claimed men were looting a home in Altadena, with commentary in both English and Spanish.

AFP Factcheck and France 24 traced the video to Instagram and YouTube posts by an LA-based photographer who confirmed it showed family members removing their own belongings as smoke billowed from their home. Local station KTLA 5 also covered the family's story. So the errant posters had lifted the video and added their own hot takes, some of them partisan, like "Fires and looting. Typical of a Democrat-run city."

Teandra Pitts, the mother and aunt of the men in the video, told AFP she was "enraged" that people were spreading falsehoods about her family's experiences.

We dug into other fire-related claims and falsehoods on Instagram last week – we've started a "Misleading narratives that matter" theme @truth_incommon:

One of our sources was Wired, which reported that Alex Jones claimed the fires are part of a “globalist plot to wage economic warfare” and deindustrialize the U.S., and that Elon Musk amplified the post. Another source was this piece from nonprofit outlet LAist, a sibling to our former local newsletter DCist:

When there's a disaster at hand, we do not need the noise these unsubstantiated narratives represent. Yet the superspreaders persist.

Meta puts its thumb on the scale

It's clear from our workshops that regular people want to do what fact-checkers do: Separate fact from fiction in the content they see. Fortunately, we have trained professionals who meet the standards of the International Fact-Checking Network to help us out.

These researchers apparently aren't good enough for Meta, which has managed to cast this neutral-by-nature function as a partisan one in an announcement on January 7 – just after the four-year anniversary of the U.S. Capitol attack and attempts to disrupt the transfer of power to then-President-elect Biden. Here's an op-ed we published in The Fulcrum about the situation last week:

Meta ditches fact-checkers: What it means for the rest of us

If CEO Mark Zuckerberg had simply disbanded his fact-checking program – surely one of the largest in the world, if not the largest – we could call it a business decision, albeit a worrying one given how readily Meta's platforms can spread misinformation. Instead, he discredited an entire global network as "biased" and engaging in "censorship." Those claims are themselves partisan: they echo misleading but now-common propaganda suggesting that anything short of a fully open, unmoderated platform – a unicorn that doesn't exist – is a plot to censor conservative voices. We don't equate being conservative with spreading lies, attacking marginalized groups and inciting violence on social media, do you? (If you silently answered "yes," please note when your brain turns to partisan generalities; that's bias at work.)

Here's an excerpt from factcheck.org's statement:

"Under the Meta program, we provided links to our articles to Meta, which used them to direct users to our work and reduce the distribution of falsehoods. Our work isn’t about censorship. We provide accurate information to help social media users as they navigate their news feeds. We did not, and could not, remove content. Any decisions to do that were Meta’s.

In the coming months, social media users in the U.S. won’t see referrals to our articles, and those of other journalists in the fact-checking program, on Meta’s platforms. Meta said it would implement a “community notes” model. You’ll have to do more work on your own when you see questionable posts. But we’re here to provide tips and tools on how you can guard against false and deceptive material."

You'll have to do more work on your own, factcheck.org said, when you see sketchy posts. Most people won't, of course. And who would you trust more to select and review content for accuracy – a nonpartisan nonprofit project of the Annenberg Public Policy Center at the University of Pennsylvania, or a crowdsourced collection of people who may or may not be experts and may well have undisclosed agendas? The latter is how a Community Notes-type system works. It can be abused – by loading up partisan responses, for example. The tech bros would likely say the system corrects itself; in an era of hyperpartisanship and AI, I'm not so sure.

Claims of bias and censorship are convenient political cudgels because they're tough to counter; all humans have bias. And bias can be mitigated only through tested, methodically nonpartisan systems and processes, like those these organizations and the IFCN offer. Many fact-checkers have issued informative statements like factcheck.org's, but most people will see Zuckerberg's comments, not theirs.

Laura Edelson of Northeastern University and Cybersecurity for Democracy (C4D) focused on an aspect of Meta's announcement that also smacks of partisanship: the company's promise to take a "more personalized approach to political content."

Her concern, she said on X and Bluesky, is that the Facebook feed algorithm is poised to once again amplify extreme voices and speech (including but probably not limited to political speech), as it did in 2016-2020, and create rabbit holes that mire users in that content. That's great for the originators and for Meta, since extreme content drives engagement; it's not great for users and communities who may be implicated in or influenced by the extreme speech. You can follow Laura @LauraEdelson2. Also check out these additional insights in The Bulwark from C4D's Yael Eisenstat, former head of election integrity and political advertising at what was then called Facebook.

An ethical approach, in our view, would be for social media leaders to recognize the power digital content can have in shifting societal norms, and to be transparent and politically neutral in supporting safety and fact-based content. Unfortunately, Zuckerberg has earned a spot on the list of powerful leaders putting their thumbs on the political scale – especially problematic since he controls the platforms where much of our political discourse takes place.

A DC-focused pink slime website?

We're preparing for the possibility that false and twisted narratives will be spread about the District of Columbia, where Truth in Common is based, in the coming years. Extremist members of Congress have the power, through the House Committee on Oversight and Accountability, to disrupt DC's sovereignty and are already working to do so. According to the office of Eleanor Holmes Norton, our nonvoting delegate in Congress, current committee members have tried to pass more bills to repeal or amend DC laws and regulations than in the past two decades.

I've written about this before but let me repeat: People don't really understand how the District works. We pay federal taxes – the highest, per capita, in the nation actually – and local taxes like everyone else but have no voting representation in Congress, even though we live just down the street. DC residents themselves may not fully grasp that we don't have a local prosecutor – crimes committed by adults here are prosecuted by the U.S. Attorney for DC, a federal entity, something Rep. Norton is trying to fix. (She can introduce bills, but she can't vote on them.) U.S. territories like Puerto Rico have a local prosecutor that's focused on local cases only; we do not.

The lack of understanding about how the nation's capital is governed creates an information void that can easily be filled with misleading narratives about what's going on. Read about another information or data void that drove public policy in multiple states in our December 2023 issue.

I awoke one day thinking we need a sort of Washington dispatch that corrects the record on what happens here, especially for audiences outside DC. For kicks, I searched to see whether anyone had that Instagram handle, and someone did:

There are X and Facebook feeds too, and the website looks like this:

Clearly NOT a website for Washington, DC news. The content is completely random – at the time of this screenshot, the Most Popular section featured a story titled "A Comprehensive Guide to Mobile Home Removal in Washington." (Please don't visit and give them the traffic; this is all you need to know.)

I sent the URL to my friend McKenzie Sadeghi at NewsGuard and asked whether this was what we call a "pink slime website" – a site masquerading as a local news outlet that actually carries hyperpartisan content and/or crappy, AI-generated clickbait (named for the pink filler in cheap hot dogs). She looked into it and confirmed that it was. Here's her assessment:

"It looks like it is hosted out of the Netherlands and that its domain data was updated as recently as September. The site appears to be a content farm that allows anyone to write for the site, and seems like it is owned by Scrollbytes, a media company based in India. The site also appears to be using AI to generate clickbait content. For example this article accidentally left the following error message: “I urge parents against letting their 14-year-old watch this anime series as an AI language model.” Based on what I see, it seems to be a fairly typical AI-powered content farm focused on ad revenue."

Sadeghi is AI and Foreign Influence Editor at NewsGuard, a company that provides transparent tools to counter mis- and disinformation. In other words, she's a professional fact-checker and disinformation researcher.

The more extremists try to delegitimize skilled, independent work that checks digital systems, the more we at Truth in Common will lift that work up, because these professionals are there to help us, the users. And no powerful communications operation should be able to do business unchecked.

Upcoming events

We have two events coming up soon, both for communicators:

- Staying Alert to Misinformation in the Media: A Guide for PR Pros
  Panel discussion with Baltimore Public Relations Council
  January 21, 2025, 9:00-10:30 am – Register here

- Navigating a Polluted Information Ecosystem: Tips for Communicators
  Talk for IABC DC chapter
  February 11, 2025, at noon – Register here

In other news

- Not to be outdone, Google announced that it's refusing to add fact-checks to search results and YouTube videos, even though the European Union's Code of Practice on Disinformation calls for them, Axios' Sara Fischer reports. Google is a signatory to the code but took this step regardless.

- Media Matters for America selected anti-media intimidation as its 2024 “Misinformer of the Year.” Previous winners include legacy media (2023), former Fox News host Tucker Carlson (2022), insurrection coup plotter Steve Bannon (2021), right-wing propaganda network Fox News (2020), conservative columnist John Solomon (2019), and – oh look – Facebook CEO Mark Zuckerberg (2018).

Follow us on Instagram @truth_incommon

LinkedIn | Threads: @dtroust | Twitter

Our mailing address is P.O. Box 21456, Washington, DC 20009

Copyright (c) 2024 Truth in Common. All rights reserved.