Misinformation at work

Amid the almost daily upsets in cable news and politics, last Friday Truth in Common sponsored a virtual event called Misinformation & the Workplace: Mitigating the Risks. Part of National Week of Conversation, the panel, moderated by yours truly, featured some great speakers:

- Dave Fleet, head of global digital crisis, Edelman

- Jillian Youngblood, executive director, Civic Genius

- Andy Slaughter, corporate and workplace communications, Amazon

We had an engaged group of 35+ folks. But since it was a free Friday lunchtime event, a bunch of people registered but didn't join, so I've summarized the event below for the benefit of all.

First, though, the news.

Breaking news

- On Twitter, Brian Stelter shared his take on Tucker Carlson's firing by breaking down a Wall Street Journal story posted an hour ago. The story is paywalled and I can no longer embed tweets 😡, so if you can't see the linked thread, here's a screengrab that gives you an inkling:

- Others are responding to Carlson's ouster by revealing his methods of persuasion. The Daily episode from yesterday (Tues. 4/25) discussed themes he returned to repeatedly, like the Great Replacement theory, along with his selective editing of January 6 footage to make it look like peaceful tourism. Similarly, my piece on critical race theory from last November dissects one Carlson broadcast to show how he defined a term no one was familiar with, then went on to stoke fear about it.

- Disney joins Dominion Voting Systems in challenging targeted attacks and defamation in court:

Note that Courier is a group of state-based newsrooms in Arizona, Florida, Iowa, Michigan, North Carolina, Pennsylvania, Virginia and Wisconsin. Check them out!

- Did you see the RNC's video response to President Biden's campaign announcement? Axios revealed this morning that it was entirely generated by AI. I just posted about it in the Circle community, which I use for news and engagement among workshop attendees:

The RNC created the video using artificial intelligence (AI) software to show a dystopian reality riddled with crime and crisis if President Biden is re-elected. In a way, it's a great example of AI's risks: in our quick-consumption culture, will people recognize these images as fake and imagined, or take them as real? Perception, unfortunately, is reality.

Here's Axios's take. Here's the video itself.

Misinformation & the Workplace: Experts weigh in

In the April 21 panel discussion, we first aligned on definitions:

- Misinformation – false information that's shared unintentionally, i.e., without any of the intentions described below. (Misinformation is also sometimes used as an umbrella term for all three terms defined here, or as shorthand for the phenomenon overall.)

- Disinformation – false information that's shared deliberately, with the intent to mislead, cause confusion or chaos, make money, advance a political agenda, or inflict harm on an individual, group, institution, etc.

- Malinformation – information that's based on reality but used to inflict harm on an individual, group, institution, etc.

We then went on to discuss how falsehoods show up at work and what communications and other experts are doing about it.

How do mis- and disinformation show up in the workplace?

The unchecked spread of falsehoods in and around the workplace can show up as employee discord and polarization; rumors about the organization, both benign and less so, that travel internally or publicly; distrust of management or of major changes, which ignites the rumor mill; campaigns by disgruntled employees; and more.

Speakers noted broader, systems-level issues as well, such as:

- Declining trust in institutions – "It's hard, today, to find a trusted messenger for your audience."

- A sense of unfairness among the populace

- Entrenched divisions and the "exhausted majority"

- Screen addiction that doubled during the pandemic

For more on systems thinking, consider David Stroh's book Systems Thinking for Social Change. David kicked off National Week of Conversation with a talk on April 17.

What can we do about it?

Speakers noted the following:

- Andy said he works to find the "ground truths" relevant to the situation. Everything's shareable, he said, and leaders should "consider every word of the message you're going to put out" -- and the channels through which it will travel.

- Dave flagged that workplaces are viewed as trusted institutions and an opportunity to address misinformation and polarization: "The workplace is the one place we can't self-select" into viewpoint-specific silos or bubbles. He also mentioned the ABCs of disinformation (bad Actors, deceptive Behaviors, harmful Content), a framework developed by Camille François of Graphika for confirming that something is, in fact, disinformation.

- I shared how neuroscience can help us understand how misinformation spreads -- for example, when people share a post because it's "interesting if true."

- Jillian talked about citizen problem-solving programs that bridge divides and help people realign on the issues, and the importance of having personal conversations in a non-confrontational setting, e.g., having coffee or a beer together.

Here's a screen-based example of Jillian's comment: the now-well-known 2017 Heineken commercial that shows folks with different viewpoints doing a project together, answering questions, then having a beer. It was tested by Stanford's Polarization and Social Change Lab along with hundreds of other mini-interventions and found effective at diminishing partisan animosity.

Who's on the front lines in an organization?

Much of the work to address mis- and disinformation takes place among corporate or crisis communications teams or partners; risk assessment and crisis planning are key to mitigating the effects, both proactively and reactively. Andy urged that we break down silos and ensure that internal and external communications teams, as well as communicators and non-communicators, are working together – not just in a crisis, but all the time.

Dave said that ESG and DEI teams can play a role in addressing these issues – I'd add HR as well. He also pointed to the need for civic and media literacy training, like the workshops Truth in Common offers.

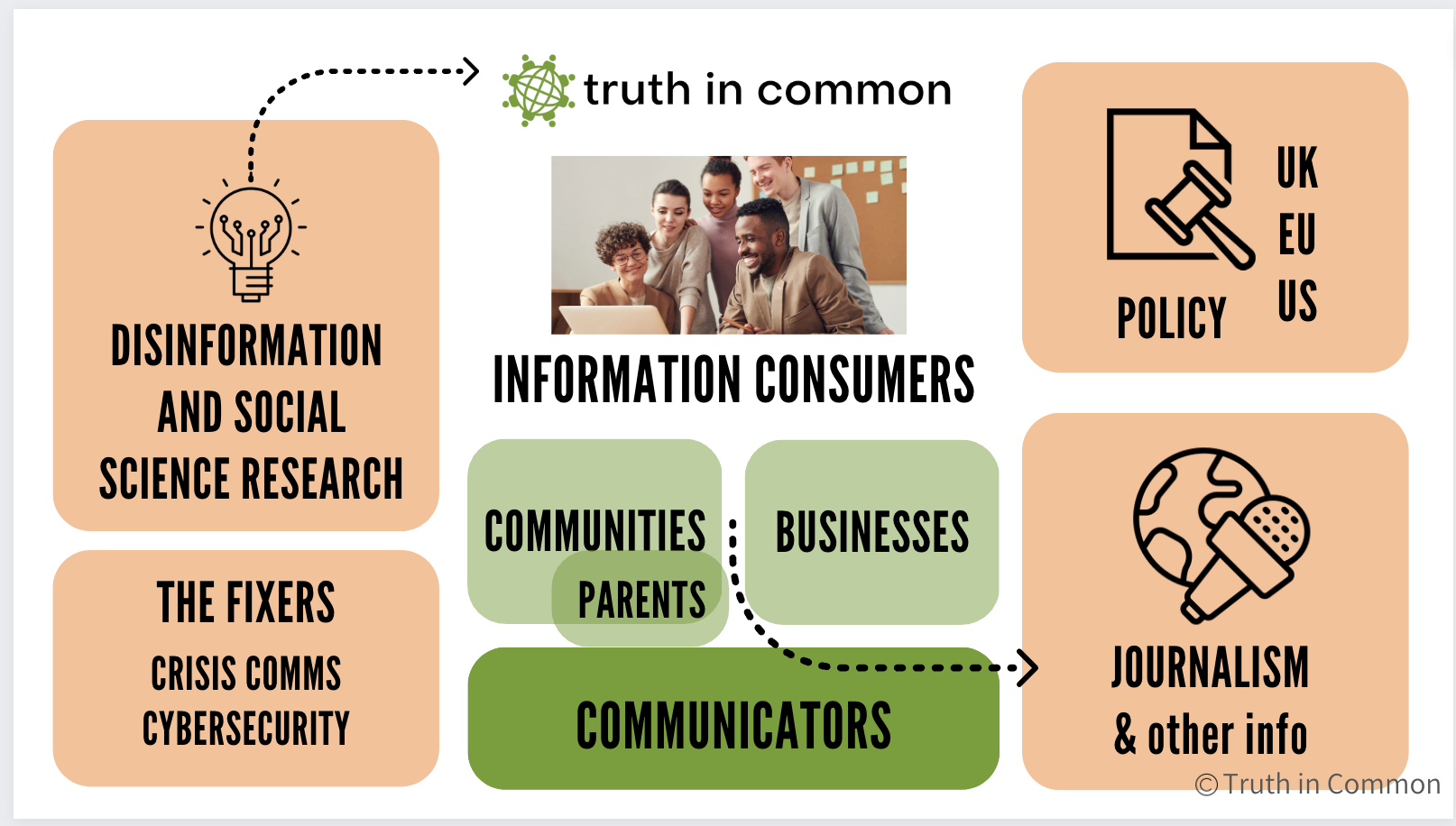

A social change approach

At the outset, I made the case for a multi-faceted social change approach to this issue that centers information consumers and complements work being done by policymakers and the tech community. It involves distilling research into user-friendly skills, revealing the link between falsehoods and polarization – a pain point for many – and creating community on this issue. The goal is for people to feel equipped and motivated to upgrade their information consumption habits – for example, by noticing emotional reactions to content and expanding their sources – but also to feel part of a movement of sorts in which people and organizations take a stand for the truth. This is the Truth in Common approach.

Audience input

As always, participants chimed in with great insights and questions, mentioning that:

- We should learn from how truth and falsehoods are delineated in court proceedings

- AI is turning up the volume on memes, which will be an escalating issue going forward. When asked whether prebunking helps, speakers said it's a newer technique in the research literature but shows promise. Another participant mentioned the need to foster healthy skepticism and to start young in educating kids on digital literacy.

- Client organizations sometimes haven't articulated their values. Speakers agreed that this is a problem, and a participant mentioned the Barrett Values Model, which looks at the gap between organizational and individual values.

- We should assess the threat from mis- or disinformation and ask, is this a problem that needs addressing?

- Intercultural communication is its own challenge in many workplaces, and a complicating factor in addressing mis- and disinformation. A participant also recommended the book Ordinary Men.

- Snapchat's "My AI" chatbot has already been rolled out to all users. Here's a piece on the platform's spike in 1-star reviews.

Viewers also shared misinformation stories that ranged from the funny – the Pope in Balenciaga – to the tragic: Euronews reported on a man who ended his life after an AI chatbot 'encouraged' him to sacrifice himself to stop climate change.

Shared resources

- An example of working across differences for a solution, from Jillian

- A piece Dave wrote for PR News on how generative AI lowers barriers to entry for disinformation purveyors

All in all, it was a fun and insightful way to spend a lunch hour.

Watch this space for more event announcements!

Take care,

Deanna